Cayley-Hamilton Theorem and Jordan Canonical Form

Last week I was discussing the Cayley-Hamilton Theorem with my officemates Hu Fu and Ashwin. The theorem is the following: given an $n \times n$ matrix $A$, we can define its characteristic polynomial by $p_A(\lambda) = \det(A - \lambda I)$. The Cayley-Hamilton Theorem says that $p_A(A) = 0$. The polynomial is something like:

$$p_A(x) = a_n x^n + a_{n-1} x^{n-1} + \dots + a_1 x + a_0$$

so we can just see it as a formal polynomial and think of:

$$p_A(A) = a_n A^n + a_{n-1} A^{n-1} + \dots + a_1 A + a_0 I$$

which is an $n \times n$ matrix. The theorem says it is the zero matrix. We thought for a while and looked at Wikipedia, where there were a few proofs, but not the one-line proof I was looking for. Later, I got this proof that I sent to Hu Fu:

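Write the matrix in the basis of its eigenvectors. Then we can write

$$A = P D P^{-1}$$

where $P$ is the matrix whose columns are the eigenvectors and $D = \mathrm{diag}(\lambda_1, \dots, \lambda_n)$ is the diagonal matrix with the eigenvalues in the main diagonal. Since $P^{-1} P = I$, we have $A^k = P D^k P^{-1}$. Now, writing $p_A(x) = \sum_{k=0}^{n} a_k x^k$, it is simple to see that:

$$p_A(A) = \sum_{k=0}^{n} a_k A^k = P \left( \sum_{k=0}^{n} a_k D^k \right) P^{-1} = P \, p_A(D) \, P^{-1}$$

and therefore, since every eigenvalue is a root of $p_A$:

$$p_A(A) = P \, \mathrm{diag}\big(p_A(\lambda_1), \dots, p_A(\lambda_n)\big) \, P^{-1} = 0$$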

And that was the one-line proof. One even simpler proof is: let $v_1, \dots, v_n$ be the eigenvectors, then $p_A(A) v_i = p_A(\lambda_i) v_i = 0$, so $p_A(A)$ must be $0$ since it returns zero for all elements of a basis. Well, I sent that to Hu Fu and he told me the proof had a bug. Not really a bug, but I was proving the theorem only for symmetric matrices. More generally, I was proving it for diagonalizable matrices. He showed me, for example, the matrix:

$$\begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}$$

which has only one eigenvalue, $\lambda = 1$, and the eigenvectors are all of the form $(a, 0)^T$ for $a \in \mathbb{R}$. So, the dimension of the space spanned by the eigenvectors is $1$, less than the dimension of the matrix. This never happens for symmetric matrices, and I guess after some time as a computer scientist, I got used to working only with symmetric matrices for almost everything I use: metrics, quadratic forms, correlation matrices, … but there is more out there than only symmetric matrices. The good news is that this proof is not hard to fix for the general case.
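To make the statement concrete, here is a minimal sketch that checks $p_A(A) = 0$ in exact rational arithmetic, including for a non-diagonalizable matrix of the kind discussed above. It is not part of the argument in this post: the helper names (`char_poly`, `poly_at_matrix`) are made up for illustration, and the characteristic polynomial is computed with the Faddeev-LeVerrier recurrence, which is one of several ways to get it. Note that it produces the coefficients of $\det(xI - A)$, which differs from $\det(A - xI)$ only by the sign $(-1)^n$.

```python
from fractions import Fraction


def mat_mul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def char_poly(A):
    """Coefficients [c_0, ..., c_n] of det(xI - A), via Faddeev-LeVerrier."""
    n = len(A)
    coeffs = [Fraction(0)] * n + [Fraction(1)]  # monic: c_n = 1
    M = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(1, n + 1):
        AM = mat_mul(A, M)
        c = -sum(AM[i][i] for i in range(n)) / k  # c_{n-k} = -trace(A M_k) / k
        coeffs[n - k] = c
        # M_{k+1} = A M_k + c_{n-k} I
        M = [[AM[i][j] + (c if i == j else 0) for j in range(n)]
             for i in range(n)]
    return coeffs


def poly_at_matrix(coeffs, A):
    """Evaluate sum_k coeffs[k] * A^k with Horner's rule."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    for c in reversed(coeffs):
        result = mat_mul(result, A)
        result = [[result[i][j] + (c if i == j else 0) for j in range(n)]
                  for i in range(n)]
    return result


# A matrix that is not diagonalizable: its eigenvectors span only one dimension.
J = [[1, 1], [0, 1]]
# A non-symmetric 3x3 example with a repeated eigenvalue (arbitrary choice).
A3 = [[2, 1, 0], [0, 2, 0], [1, 0, 3]]

for name, mat in (("J", J), ("A3", A3)):
    p = char_poly(mat)
    print(name, "coefficients:", [str(c) for c in p])
    print(name, "p(matrix):", poly_at_matrix(p, mat))
```

Both matrices give the zero matrix, even though neither has a basis of eigenvectors, which is exactly the case the diagonalization proof does not cover.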

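First, it is easy to prove that for each root $\lambda$ of the characteristic polynomial there is at least one eigenvector associated with it: just see that $\det(A - \lambda I) = 0$, and therefore there must be $v \neq 0$ with $(A - \lambda I) v = 0$. Eigenvectors associated with distinct eigenvalues are linearly independent, so if all the roots are distinct, then there is a basis of eigenvectors, and therefore the matrix is diagonalizable (notice that maybe we will need to use complex eigenvalues, but that is ok). The good thing is that a matrix having two identical eigenvalues is a “coincidence”. We can identify $n \times n$ matrices with $\mathbb{R}^{n^2}$. The matrices with repeated eigenvalues form a zero-measure subset of $\mathbb{R}^{n^2}$; they are in fact the roots of a polynomial in the entries of $A$. This polynomial is the resultant $\mathrm{Res}(p_A, p_A')$ of the characteristic polynomial and its derivative, which vanishes exactly when $p_A$ has a repeated root. Therefore, we proved the Cayley-Hamilton theorem in the complement of a zero-measure set in $\mathbb{R}^{n^2}$. That complement is dense, and since $A \mapsto p_A(A)$ is a continuous function, the identity $p_A(A) = 0$ extends naturally to all matrices $A \in \mathbb{R}^{n^2}$.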

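We can also interpret that probabilistically: take a matrix $U$ where each entry $U_{ij}$ is taken uniformly at random from $[0, 1]$. Then, for any $\epsilon > 0$, the matrix $A + \epsilon U$ has with probability $1$ all different eigenvalues. So, $p_{A + \epsilon U}(A + \epsilon U) = 0$ with probability $1$. Now, just make $\epsilon \to 0$.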
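The perturbation idea can be sketched in the $2 \times 2$ case, where the "repeated eigenvalue" condition is a single polynomial in the entries: the discriminant $(a - d)^2 + 4bc$ of the characteristic polynomial. The snippet below is only an illustration under a few assumptions of mine: the matrix `A` is the non-diagonalizable example $\begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}$, `U` is a fixed matrix with entries in $[0, 1]$ standing in for the random draw (so the run is reproducible), and the function names are hypothetical.

```python
from fractions import Fraction


def discriminant_2x2(M):
    """Discriminant of the characteristic polynomial of a 2x2 matrix.

    It equals trace^2 - 4*det and is zero exactly when the two
    eigenvalues coincide.
    """
    (a, b), (c, d) = M
    return (a - d) ** 2 + 4 * b * c


def char_poly_at_self_2x2(M):
    """Evaluate p_M(M) = M^2 - trace(M) * M + det(M) * I for a 2x2 matrix."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    M2 = [[a * a + b * c, a * b + b * d],
          [c * a + d * c, c * b + d * d]]
    return [[M2[0][0] - tr * a + det, M2[0][1] - tr * b],
            [M2[1][0] - tr * c, M2[1][1] - tr * d + det]]


# Non-diagonalizable matrix: repeated eigenvalue 1, discriminant 0.
A = [[Fraction(1), Fraction(1)], [Fraction(0), Fraction(1)]]
# Fixed stand-in for a random matrix with entries in [0, 1] (assumption).
U = [[Fraction(1, 2), Fraction(1, 3)], [Fraction(1, 5), Fraction(1, 7)]]


def perturbed(eps):
    """Return A + eps * U."""
    return [[A[i][j] + eps * U[i][j] for j in range(2)] for i in range(2)]


print("discriminant of A:", discriminant_2x2(A))
for k in range(4):
    eps = Fraction(1, 10 ** k)
    M = perturbed(eps)
    # Distinct eigenvalues for every eps > 0, and p_M(M) is the zero matrix.
    print(eps, discriminant_2x2(M) != 0, char_poly_at_self_2x2(M))
```

Along the whole sequence the perturbed matrix has distinct eigenvalues and satisfies its own characteristic polynomial; letting `eps` shrink to zero recovers `A`, where the discriminant vanishes but the identity still holds by continuity.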

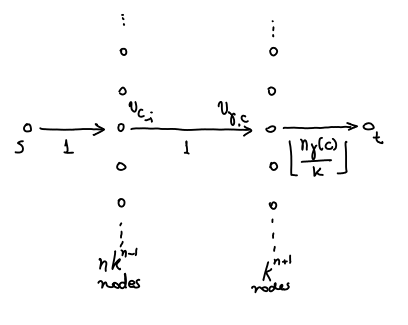

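Ok, this proves the theorem for real and complex matrices, but what about a matrix defined over a general field, where we can’t use those continuity arguments? A way to get around it is by using the Jordan Canonical Form, which is a generalization of the eigenvector decomposition. Not all matrices have an eigenvector decomposition, but all matrices over an algebraically closed field can be written in Jordan Canonical Form. Given any $A$ there is an invertible matrix $P$ so that:

$$A = P \begin{pmatrix} B_1 & & & \\ & B_2 & & \\ & & \ddots & \\ & & & B_r \end{pmatrix} P^{-1}$$

where the $B_i$ are blocks of the form:

$$B_i = \begin{pmatrix} \lambda_i & 1 & & \\ & \lambda_i & \ddots & \\ & & \ddots & 1 \\ & & & \lambda_i \end{pmatrix}$$

By the same argument as above, we just need to prove Cayley-Hamilton for each block separately. So we need to prove that $p_A(B_i) = 0$. If the block has size $1$, then it is exactly the proof above. If the block is bigger, then we need to look at what $B_i^k$ looks like. Fix one block $B$ with eigenvalue $\lambda$. By inspection, for a block of size $3$:

$$B^2 = \begin{pmatrix} \lambda^2 & 2\lambda & 1 \\ & \lambda^2 & 2\lambda \\ & & \lambda^2 \end{pmatrix}, \qquad B^3 = \begin{pmatrix} \lambda^3 & 3\lambda^2 & 3\lambda \\ & \lambda^3 & 3\lambda^2 \\ & & \lambda^3 \end{pmatrix}$$

Typically, for $B^k$ we have in each row $s$, starting in column $s$, the sequence $\lambda^k, \binom{k}{1} \lambda^{k-1}, \binom{k}{2} \lambda^{k-2}, \dots$, i.e., $(B^k)_{s, s+j} = \binom{k}{j} \lambda^{k-j}$ for $j \geq 0$. So, writing $p_A(x) = \sum_k a_k x^k$, we have

$$\big(p_A(B)\big)_{s, s+j} = \sum_{k} a_k \binom{k}{j} \lambda^{k-j}$$

which is the coefficient of $(x - \lambda)^j$ when $p_A$ is expanded around $\lambda$ (in characteristic zero this is $p_A^{(j)}(\lambda) / j!$). If the block has size $m$, then $\lambda$ has multiplicity at least $m$ in $p_A$, because the characteristic polynomial of $A$ is the product of the characteristic polynomials of its Jordan blocks, and therefore these coefficients are zero for $j = 0, \dots, m-1$, and therefore $p_A(B) = 0$, as we wanted to prove.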
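The pattern for powers of a Jordan block is easy to check by brute force. The following sketch (my own illustration, with an arbitrarily chosen eigenvalue `lam = 3` and block size `m = 4`, and hypothetical helper names) verifies that the entry $j$ places to the right of the diagonal in $B^k$ is $\binom{k}{j} \lambda^{k-j}$, and that $(B - \lambda I)^m = 0$, which is the special case $p(x) = (x - \lambda)^m$ of the argument above.

```python
from math import comb


def jordan_block(lam, m):
    """m x m Jordan block: lam on the diagonal, 1 on the superdiagonal."""
    return [[lam if i == j else (1 if j == i + 1 else 0) for j in range(m)]
            for i in range(m)]


def mat_mul(X, Y):
    """Product of two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(X, k):
    """X^k by repeated multiplication (k >= 0)."""
    n = len(X)
    result = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(k):
        result = mat_mul(result, X)
    return result


lam, m = 3, 4  # arbitrary illustrative values
B = jordan_block(lam, m)

# Check (B^k)[s][s+j] == C(k, j) * lam^(k-j) for several powers k.
for k in range(7):
    Bk = mat_pow(B, k)
    for s in range(m):
        for j in range(m - s):
            expected = comb(k, j) * lam ** (k - j) if j <= k else 0
            assert Bk[s][s + j] == expected

# N = B - lam * I is nilpotent: N^m = 0 while N^(m-1) != 0.
N = [[B[i][j] - (lam if i == j else 0) for j in range(m)] for i in range(m)]
print(mat_pow(N, m - 1))
print(mat_pow(N, m))
```

The last two lines show why multiplicity matters: a factor $(x - \lambda)^{m-1}$ is not enough to kill a block of size $m$, but $(x - \lambda)^m$ is.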

It turned out not to be a very short proof, but it is still short, since it uses mostly elementary stuff, and the proof is really intuitive in some sense. I took some lessons from that: (i) first, it reinforces my idea that, if I need to say something about a matrix, the first thing I do is to look at its eigenvector decomposition. A lot of Linear Algebra problems are very simple when we consider things in the right basis. Normally the right basis is the eigenvector basis. (ii) not all matrices are diagonalizable. But in those cases, the Jordan Canonical Form comes to our help and we can do almost the same as we did with the eigenvalue decomposition.