More about hats and auctions

In my last post about hats, I said I would soon post a follow-up with more problems and talk a bit more about this kind of problem. I never did, but here are a few nice problems:

Those $n$ people are again in a room, each with a hat which is either black or white (picked at random with probability $\frac{1}{2}$), and they can see the colors of the other people’s hats but they can’t see their own. They write on a piece of paper either “BLACK” or “WHITE”. The whole team wins if all of them get their colors right. The whole team loses if at least one writes the wrong color. Before entering the room and getting the hats, they can strategize. What is a strategy that makes them win with probability $\frac{1}{2}$?

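If they all choose their colors at random, the probability of winning is very small: $2^{-n}$. So we should try to correlate their guesses somehow. The solution is again related to error correcting codes. We can think of the hats as a string of bits (say WHITE $= 0$ and BLACK $= 1$). How can we recover one bit if it is lost? The simple engineering solution is to add a parity check. We append to the string $x_1, \ldots, x_n$ a bit $x_{n+1} = x_1 \oplus x_2 \oplus \cdots \oplus x_n$. So, if bit $x_i$ is lost, we know it is $x_i = \bigoplus_{j \neq i} x_j$, where $j$ ranges over $1, \ldots, n+1$. We can use this idea to solve the puzzle above: if hats are placed with probability $\frac{1}{2}$, the parity check $x_1 \oplus \cdots \oplus x_n$ will be $0$ with probability $\frac{1}{2}$ and $1$ with probability $\frac{1}{2}$. They can decide beforehand that everyone will assume $x_{n+1} = 0$, i.e., player $i$ writes the XOR of the hats he sees, and with probability $\frac{1}{2}$ they are right and everyone gets his hat color right. Now, let’s extend this problem in some ways: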

The same problem, but there are $k$ hat colors, chosen independently with probability $\frac{1}{k}$ each, and they win if everyone gets his color right. Find a strategy that wins with probability $\frac{1}{k}$.
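One possible answer, generalizing the parity check to a sum modulo $k$: encode the colors as $0, \ldots, k-1$ and let player $i$ assume $x_1 + \cdots + x_n \equiv 0 \pmod{k}$, so he writes the unique color that makes this true given the hats he sees. The team wins exactly when the assumption holds, which happens with probability $\frac{1}{k}$. The Python sketch below (function names are hypothetical) checks this by enumerating all $k^n$ assignments for small $n$ and $k$.

```python
from fractions import Fraction
from itertools import product


def mod_k_guesses(hats, k):
    """Player i assumes sum(hats) % k == 0 and solves for his own color."""
    n = len(hats)
    guesses = []
    for i in range(n):
        seen = sum(hats[j] for j in range(n) if j != i)  # only the other hats
        guesses.append(-seen % k)
    return guesses


def mod_k_win_probability(n, k):
    """Exact probability that every player is right, over uniform i.i.d. colors."""
    configs = list(product(range(k), repeat=n))
    wins = sum(mod_k_guesses(list(c), k) == list(c) for c in configs)
    return Fraction(wins, len(configs))


print(mod_k_win_probability(4, 3))  # 1/3
```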

There are again $k$ hat colors, chosen independently with probability $\frac{1}{k}$ each, and they win if at least a fraction $f$ ($0 < f < 1$) of the people guess the right color. Find a strategy that wins with probability $\frac{1}{fk}$.
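One possible strategy, again a sketch with hypothetical names: let $m = \lceil fn \rceil$ be the number of correct guesses needed, split the players into consecutive groups of $m$, and let every player in group $t$ write the color that would make the hats sum to $t$ modulo $k$. Every player in group $t$ is right exactly when the sum is $t$, so the team wins with probability $\min(k, \lfloor n/m \rfloor)/k$, which equals $\frac{1}{fk}$ when $fn$ and $\frac{1}{f}$ are integers and $\frac{1}{f} \le k$. Leftover players, if any, join group $0$. Passing $f$ as a `Fraction` avoids floating-point rounding in $\lceil fn \rceil$.

```python
import math
from fractions import Fraction
from itertools import product


def group_targets(n, k, f):
    """Target sum (mod k) for each player: consecutive groups of ceil(f*n) players."""
    m = math.ceil(f * n)
    groups = min(k, n // m)
    # Players beyond the last full group join group 0; they only add correct guesses.
    return [i // m if i < groups * m else 0 for i in range(n)]


def fraction_guesses(hats, k, targets):
    """Player i writes the color that would make the hat sum equal targets[i] mod k."""
    n = len(hats)
    return [
        (targets[i] - sum(hats[j] for j in range(n) if j != i)) % k
        for i in range(n)
    ]


def fraction_win_probability(n, k, f):
    """Exact probability that at least a fraction f of the players are right."""
    targets = group_targets(n, k, f)
    needed = math.ceil(f * n)
    configs = list(product(range(k), repeat=n))
    wins = 0
    for hats in configs:
        guesses = fraction_guesses(hats, k, targets)
        correct = sum(g == h for g, h in zip(guesses, hats))
        wins += correct >= needed
    return Fraction(wins, len(configs))


# n = 6 players, k = 4 colors, f = 1/2: predicted 1/(f*k) = 1/2.
print(fraction_win_probability(6, 4, Fraction(1, 2)))
```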

Back to the problem where we have just BLACK and WHITE colors, chosen with probability $\frac{1}{2}$, and everyone needs the right color to win: can you prove that $\frac{1}{2}$ is the best one can do? And what about the two other problems above?
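The matching lower bound, that the two-color parity strategy wins with probability exactly $\frac{1}{2}$, is easy to confirm by brute force. This Python sketch assumes the encoding WHITE $= 0$ and BLACK $= 1$ (function names are hypothetical): it recovers an erased bit of a parity-checked string, lets every player write the XOR of the hats he sees, and counts the winning assignments among all $2^n$.

```python
from functools import reduce
from itertools import product
from operator import xor


def recover_lost_bit(codeword, i):
    """Recover bit i of a codeword whose bits XOR to 0 (data bits plus parity bit)."""
    return reduce(xor, (b for j, b in enumerate(codeword) if j != i), 0)


def parity_guesses(hats):
    """Player i assumes the hats XOR to 0 and writes the XOR of the hats he sees."""
    return [recover_lost_bit(hats, i) for i in range(len(hats))]


def parity_win_probability(n):
    """Exact winning probability of the parity strategy for n players."""
    wins = sum(
        parity_guesses(list(hats)) == list(hats)
        for hats in product((0, 1), repeat=n)
    )
    return wins / 2 ** n


# Data bits 1, 0, 1, 1 get parity bit 1; erasing position 2 and recovering it gives 1.
print(recover_lost_bit([1, 0, 1, 1, 1], 2))
print([parity_win_probability(n) for n in range(1, 7)])  # 0.5 for every n
```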

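The first two use variations of the parity check idea in their solutions. For the optimality question, fix any strategy of the players; for each string $x \in \{0,1\}^n$ they win with some probability $p_x$. Therefore the total probability of winning is $\frac{1}{2^n} \sum_x p_x$. Fix a player $i$ and let $\tilde{x} = (x_1, \ldots, x_{i-1}, 1 - x_i, x_{i+1}, \ldots, x_n)$, i.e., the same input but with bit $i$ flipped. Notice that the answer of player $i$ is the same (or at least has the same distribution) in both $x$ and $\tilde{x}$, since he can’t distinguish between $x$ and $\tilde{x}$. Since $x_i \neq \tilde{x}_i$, he is right in at most one of them: if he writes $x_i$ with probability $q$, then $p_x \le q$ and $p_{\tilde{x}} \le 1 - q$. Therefore, $p_x + p_{\tilde{x}} \le 1$. Since $x \mapsto \tilde{x}$ is a bijection on $\{0,1\}^n$, we have $\sum_x p_{\tilde{x}} = \sum_x p_x$, so $2 \sum_x p_x = \sum_x (p_x + p_{\tilde{x}}) \le 2^n$. This way, no strategy can have more than $\frac{1}{2}$ probability of winning.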
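The bound can also be checked by brute force for tiny $n$. A randomized strategy wins with a probability that is an average over deterministic strategies, so it suffices to enumerate every deterministic profile, where each player maps the $2^{n-1}$ possible views of the other hats to a guess. The Python sketch below (a hypothetical helper, feasible only for $n \le 3$ since there are $2^{n 2^{n-1}}$ profiles) finds that the best profile wins with probability exactly $\frac{1}{2}$.

```python
from fractions import Fraction
from itertools import product


def best_deterministic_win_probability(n):
    """Maximum winning probability over all deterministic strategy profiles (2 colors)."""
    configs = list(product((0, 1), repeat=n))
    views = list(product((0, 1), repeat=n - 1))  # the n-1 hats a player sees
    view_index = {v: j for j, v in enumerate(views)}
    # A player's strategy is a table assigning a guess to each possible view.
    tables = list(product((0, 1), repeat=len(views)))
    best = 0
    for profile in product(tables, repeat=n):
        wins = sum(
            all(profile[i][view_index[x[:i] + x[i + 1:]]] == x[i] for i in range(n))
            for x in configs
        )
        best = max(best, wins)
    return Fraction(best, len(configs))


print([best_deterministic_win_probability(n) for n in range(1, 4)])
# [Fraction(1, 2), Fraction(1, 2), Fraction(1, 2)]
```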

Another variation of it:

Suppose now we have two colors BLACK and WHITE and the hats are drawn from an arbitrary distribution $\mathcal{D}$, i.e., we have a probability distribution over $\{0,1\}^n$ and we draw the colors from that distribution. Notice that now the hats may be correlated. How can they again win with probability at least $\frac{1}{2}$ (to win, everyone needs the right answer)?
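One way to see it, sketched in Python under the encoding WHITE $= 0$ and BLACK $= 1$: the parity strategy with target $t$ (player $i$ writes $t$ XOR the hats he sees) wins exactly on the strings whose XOR is $t$. Either the even or the odd strings carry at least half of the mass of $\mathcal{D}$, so the players agree beforehand on the heavier class. The distribution is passed as a dictionary from hat tuples to probabilities; the example distribution `D` and all function names are made up for illustration.

```python
from fractions import Fraction
from functools import reduce
from operator import xor


def parity(bits):
    return reduce(xor, bits, 0)


def heavier_parity(dist):
    """Return 0 or 1, whichever parity class has more probability under dist."""
    p_even = sum(p for x, p in dist.items() if parity(x) == 0)
    p_odd = sum(p for x, p in dist.items() if parity(x) == 1)
    return 0 if p_even >= p_odd else 1


def target_parity_guesses(hats, target):
    """Player i assumes the XOR of all hats equals target."""
    n = len(hats)
    return [target ^ parity(hats[j] for j in range(n) if j != i) for i in range(n)]


def correlated_win_probability(dist):
    target = heavier_parity(dist)
    return sum(
        p for x, p in dist.items() if target_parity_guesses(x, target) == list(x)
    )


# A made-up correlated distribution over three hats.
D = {
    (0, 0, 0): Fraction(1, 10),
    (1, 0, 0): Fraction(4, 10),
    (1, 1, 1): Fraction(3, 10),
    (0, 1, 1): Fraction(2, 10),
}
print(correlated_win_probability(D))  # 7/10: the odd strings carry 4/10 + 3/10
```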

I really like these hat problems. A friend of mine just pointed out to me that there is a very nice paper by Bobby Kleinberg generalizing several aspects of hat problems, for example, when players have limited visibility of other players’ hats.

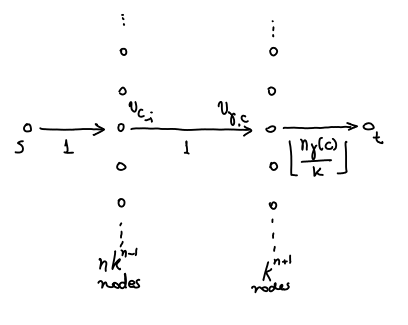

I became interested in this sort of problem after reading the Derandomization of Auctions paper. Hat guessing games are not just a good model for error correcting codes; they are also a good model for truthful auctions. Consider an auction with a set of single-parameter agents, i.e., an auction where each player $i$ gives one bid $b_i$ indicating how much he is willing to pay to win. The constraints are given by a set $\mathcal{X} \subseteq 2^{[n]}$ of all feasible allocations. Based on the bids $b = (b_1, \ldots, b_n)$ we choose an allocation $S \in \mathcal{X}$ and we charge payments to the bidders. An example of a problem like this is the Digital Goods Auction, where $\mathcal{X} = 2^{[n]}$, i.e., any subset of the bidders can be served.

In a previous blog post, I discussed the concept of a truthful auction. If an auction is randomized, a universally truthful auction is one that is truthful even if all the random bits in the mechanism are revealed to the bidders. Consider the Digital Goods Auction. We can characterize universally truthful digital goods auctions as bid-independent auctions. A bid-independent auction is given by functions $f_i$, which associate to each $b_{-i}$ (the bids of everyone except $i$) a random variable $f_i(b_{-i})$. In that auction, we offer the service to player $i$ at price $f_i(b_{-i})$. If $b_i \ge f_i(b_{-i})$, we allocate to $i$ and charge him $f_i(b_{-i})$. Otherwise, we don’t allocate and we charge nothing.
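A minimal Python sketch of a bid-independent digital goods auction. The price rule `random_other_bid` (the price is the bid of a uniformly random other player) is a hypothetical choice of $f_i$ for illustration only, and the mechanism’s random bits are passed in explicitly as `coins`, one uniform number per player, which mirrors revealing them to the bidders. The loop at the end checks that, for fixed coins, no bidder gains by misreporting: his price does not depend on his own bid, so his report only decides whether he accepts a price he cannot influence.

```python
import random


def bid_independent_auction(bids, price_fn, coins):
    """Offer player i the price price_fn(b_{-i}, coins[i]); he wins iff b_i >= price."""
    allocation, payments = [], []
    for i, bid in enumerate(bids):
        others = bids[:i] + bids[i + 1:]
        price = price_fn(others, coins[i])  # never looks at bids[i]
        won = bid >= price
        allocation.append(won)
        payments.append(price if won else 0.0)
    return allocation, payments


def random_other_bid(others, u):
    """Hypothetical f_i: the bid of a uniformly random other player (u in [0, 1))."""
    return sorted(others)[int(u * len(others))]


def utility(value, report, i, bids, coins, price_fn=random_other_bid):
    """Utility of player i with true value `value` when he reports `report`."""
    reported = list(bids)
    reported[i] = report
    allocation, payments = bid_independent_auction(reported, price_fn, coins)
    return value - payments[i] if allocation[i] else 0.0


rng = random.Random(0)
for _ in range(200):
    bids = [rng.uniform(0, 10) for _ in range(5)]
    coins = [rng.random() for _ in bids]  # the revealed random bits
    i = rng.randrange(len(bids))
    truthful = utility(bids[i], bids[i], i, bids, coins)
    assert all(truthful >= utility(bids[i], r, i, bids, coins) for r in range(0, 11))
print("no profitable deviation found")
```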

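It is not hard to see that all universally truthful mechanisms are like that: if $x_i(b_i)$ is the probability that player $i$ gets the item bidding $b_i$ (which truthfulness forces to be non-decreasing in $b_i$), let $U$ be a uniform random variable on $[0,1]$ and define $f_i(b_{-i}) = x_i^{-1}(U)$. Notice that here $x_i(b_i) = x_i(b_i, b_{-i})$ also depends on $b_{-i}$, but we are inverting with respect to $b_i$. Proving that this works is a simple exercise.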
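The construction can be sketched numerically in Python. Fix $b_{-i}$, so the allocation probability is a function of $b_i$ alone; here it is the made-up curve $x(b) = 1 - e^{-b}$, standing in for whatever monotone $x_i$ a truthful mechanism has. The generalized inverse $x^{-1}(u) = \inf\{b : x(b) \ge u\}$ is computed by bisection (an implementation choice, with an arbitrary search cap `hi`), and a Monte Carlo estimate checks that offering the price $x^{-1}(U)$ allocates to a bidder with bid $b$ with probability close to $x(b)$.

```python
import math
import random


def generalized_inverse(x, u, hi=1e6, tol=1e-9):
    """Smallest b in [0, hi] with x(b) >= u, for non-decreasing x; inf if none."""
    if x(hi) < u:
        return math.inf  # price too high to ever be accepted
    lo = 0.0
    if x(lo) >= u:
        return lo
    # Invariant: x(lo) < u <= x(hi).
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if x(mid) >= u:
            hi = mid
        else:
            lo = mid
    return hi


def allocation_curve(b):
    """Hypothetical x_i(b_i) for a fixed b_{-i}: non-decreasing, from 0 up to 1."""
    return 1 - math.exp(-b)


rng = random.Random(1)
prices = [generalized_inverse(allocation_curve, rng.random()) for _ in range(10_000)]
for b in (0.5, 1.0, 2.0):
    empirical = sum(b >= p for p in prices) / len(prices)
    print(f"b={b}: P(win) ~ {empirical:.3f}, x(b) = {allocation_curve(b):.3f}")
```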

With this characterization, universally truthful auctions suddenly look very much like hat guessing games: we need to design a function that looks at everyone else’s bid but not at our own and, in some sense, “guesses” what our bid probably is, and uses that guess to calculate the price we offer. It would be great to be able to design a function that returns $f_i(b_{-i}) = b_i$. That is unfortunately impossible, since $f_i$ never sees $b_i$. But how can we approximate $b_i$ nicely? Some papers, like Derandomization of Auctions and Competitiveness via Consensus, use this idea.