Hats, codes and puzzles

When I was a child someone told me the following problem:

A king promised to marry his daughter to the most intelligent man. Three princes came to claim her hand and he tryed the following logic experiment with them: The princes are gathered into a room and seated in a line, one behind the other, and are shown 2 black hats and 3 white hats. They are blindfolded, and 1 hat is placed on each of their heads, with the remaining hats hidden in a different room. The first one to deduce his hat color will marry the princess. If some prince claims his hat color incorrectly he dies.

The prince who is seated behind removes his blindfold, sees the two hats in front of him and says nothing. Then the prince in the middle removes his blindfold after that and he can see the hat of the prince in front of him. He also says nothing. Noticing the other princes said nothing, the prince seated in the first whole, without even removing his blindfold, gives the correct answer? The question is: what is the color he said?

This is a simple logical puzzle: we just write all the possibilities and start ruling them out given that the other princes didn’t answer and in the end we can find the color of his hat. I remember that this puzzle surprised me a lot as a kid. A found it extremly cool by then, what made me want to read books about logic problems. After that, I had a lot of fun reading the books by Raymond Smullyan. I usually would read the problems, think something like: there can’t ba a solution to that. Then go to school with the problem in mind and spend the day thinking about that. Here is a problem I liked a lot:

There is one prisoner and there are two doors: each has one guardian. One door leads to an exit and one door leads to death. The prisioner can choose one door to open. One guardian speaks only the truth and one guardian always lies. But you don’t know which door is which, which guardian is which and who guards each door. You are allowed to choose one guardian and make him one Yes/No question, and then you need to choose a door. What is the right question to ask?

But my goal is not to talk about logic puzzles, but about Hat problems. There are a lot of variations of the problems above: in all of them a person is allowed to see the other hats but not his own hat and we need to “guess” which is the color of our hat. If we think carefully, we will see that this is a very general kind of problem in computer science: (i) the whole goal of learning theory is to predict one thing from a lot of other things you observe; (ii) in error correcting code, we should guess one bit from all the others, or from some subset of the others; (iii) in universal truthful mechanisms, we need to make a price offer to one player that just depends on all other players bids. I’ll come back to this example in a later post, since it is what made me interested in those kinds of problems, but for now, let’s look at one puzzle I was told about by David Malec at EC’09:

There are black and white hats and

people: for each of them we choose one color independently at random with probability

. Now, they can look at each others hats but not at their own hat. Then they need to write in a piece of paper either “PASS” or one color. If all pass or if someone has a wrong color, the whole team loses (this is a team game) and if at lest one person gets the color right and no one gets wrong, the whole team wins. Create a strategy for the team to win with

probability.

To win with probability is easy: one person will always write “BLACK” and the others “PASS”. A better strategy is the following: if one person sees two hats of equal color, he writes the opposite color, otherwise, he passes. It is easy to see the team wins except in the case where all hats are the same color, what happens with

probability. We would like to extend this to a more general setting:

There are black and white hats and

people: for each of them we choose one color independently at random with probability

. Now, they can look at each others hats but not at their own hat. Then they need to write in a piece of paper either “PASS” or one color. If all pass or if someone has a wrong color, the whole team loses (this is a team game) and if at lest one person gets the color right and no one gets wrong, the whole team wins. Create a strategy for the team to win with

probability.

It is a tricky question on how to extend the above solution in that case. A detailed solution can be found here. The idea is quite ingenious, so I’ll sketch here. It envolves Error Correcting Code, in that case, the Hamming Code. Let with sum and product modulo

. Let

be the non-zero vector of

and the following linear map:

Let be the kernel of that application. Then, it is not hard to see that

is a partition of

and also that because of that fact, for each

either

or exists a unique

s.t.

. This gives an algorithm for just one player to guess his correct color. Let

be the color vector of the hats. Player

sees this vector as:

which can be or

. The strategy is: if either one of those vector is in

, write the color corresponding to the other vector. If both are out of

, pass. The team wins iff

, what happens with

probability. Is is an easy and fun exercise to prove those facts. Or you can refer to the solution I just wrote.

Now, we can complicate it a bit more: we can add other colors and other distributions. But I wanted to move to a different problem: the paper Derandomization of Auctions showed me an impressive thing: we can use coding theory to derandomize algorithms. To illustrate their ideas, they propose the following problem:

Color guessing problem: There are

people wearing hats of

different colors. If each person can see everyone else’s hats but not his or her own. Each person needs to guess the color of his own hat. We want a deterministic guessing algorithm that

fraction of each color class is guessed correctly.

The problem is very easy if we have a source of random bits. Each person guesses some color at random. It seems very complicated to do that without random bits. Surprisingly, we will solve that using a flow computation:

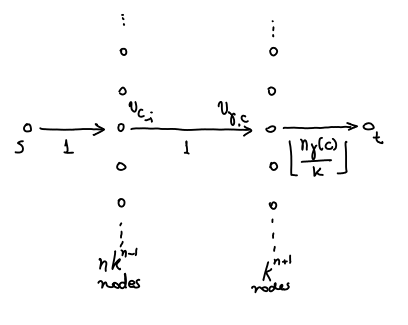

Let be an array of colors

the array with color

removed. Consider the following flow network: nodes

and

(source and sink), nodes

for each

. There are

such nodes. Consider also nodes in the form

where

is a color (

) and

is a color vector. There are

such nodes.

We have edges from to

for all nodes of that kind. And we have edges from

to

. Now, if

, i.e., if

completed in the

-th coordinate with

generates

, then add an edge from

to

.

Consider the following flow: add unit of flow from

to

and from

split that flow in pieces of size

and send each to

for

. Now, each node

receives

flow, where

is the number of occurencies of

in

. Send all that flow to

.

We can think of that flow as the guessing procedure. When we see we choose the guess independently at random and this way, each

receives in expectation

guesses

. Notice that an integral flow in that graph represents a deterministic guessing procedure: so all we need is an integral flow so that the flow from

to

is

. The flow received is from nodes of the type:

and that means that bidder

in

, looking at the other hats will correctly choose

,

times.

Now, define the capacities this way: for all edges from to

and from

to

have capacity

and from

to

capacity

. There is an integral flow that saturates all edges from

to

, because of the fractional flow showed. So, the solution gives us a deterministic decision procedure.

In the next blog post, I’ll try to show the result in the Derandomization of Auctions that relates that to competitive auctions.