Mechanism Design

In the early days of algorithm design, the goal was to solve combinatorial problems exactly and efficiently. Cook’s Theorem and the theory of NP-completeness showed us that some problems are inherently hard. How can we deal with this? By trying to find approximate solutions, or by looking at restricted instances of those problems that are tractable. It turns out that the lack of computational power (NP-hardness in some sense) is not the only obstacle to solving problems optimally. Online algorithms propose a model where the difficulty is due to lack of information: the algorithm must make some decisions before seeing the entire input. Again, in most cases it is hopeless to get an optimal solution (the solution we could get if we knew the input ahead of time), and our goal is not to be far from it. Another example of a natural limitation is streaming algorithms, where you must solve a certain problem with limited memory. Imagine, for example, an algorithm that runs in a router that receives gigabits each second. It is impossible to store all the information, and yet we want to process this very large input and give an answer at some point.

An additional model is inspired by economics: there are a bunch of agents who each hold part of the input to the algorithm and who are interested in the solution, say, they have a certain value for each final outcome. Now, they will release their part of the input. They may lie about it to manipulate the final result according to their own interest. How to prevent that? We need to augment the algorithm with some economic incentives to make sure they don’t harm the final solution too much. We need to care about two things now: we still want a solution not too far from the optimal, but we also need to provide incentives. Such an algorithm with incentives is called a mechanism, and this represents one important field in Algorithmic Game Theory called Mechanism Design.

The simplest setting where this can occur is in a matching problem. Suppose there are $n$ people and one item. I want to decide which person will get the item. Person $i$ has a value of $v_i$ for the item. Then I ask people their values and give the item to the person with the highest value, and this way I maximize the total value of the matching. But wait, this is not a mechanism yet. People don’t have incentives to play truthfully in this game. They may want to report higher valuations to get the item. The natural solution is to charge some payment $p$ from whoever gets the item. But how much to charge? The solution is the Vickrey auction, where we charge the winner the second-highest bid. More about the Vickrey auction and some other examples of truthful mechanisms can be found in the Algorithmic Game Theory book, which is available on Tim Roughgarden’s website.
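To make the Vickrey rule concrete, here is a minimal Python sketch of a single-item second-price auction. The function names and the tie-breaking rule (lowest index wins) are my own assumptions for illustration, not something fixed by the auction itself.

```python
def vickrey_auction(bids):
    """Second-price sealed-bid auction for a single item.

    bids: list of numbers, one per bidder.
    Returns (winner_index, payment). Ties go to the lowest index
    (an arbitrary choice made for this sketch).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    # max() returns the first maximal element, so ties go to the lowest index.
    winner = max(range(len(bids)), key=lambda i: bids[i])
    # The winner pays the highest bid among the other bidders.
    payment = max(b for i, b in enumerate(bids) if i != winner)
    return winner, payment


def utility(value, bids, i):
    """Utility of bidder i, whose true value is `value`, given the reported bids."""
    winner, payment = vickrey_auction(bids)
    return value - payment if winner == i else 0
```

For bids `[3, 7, 5]`, bidder 1 wins and pays 5. If bidder 1 truly values the item at 7, no other report gives her more than the utility of 2 she gets by bidding 7: bidding below 5 loses the item, and bidding anything above 5 leaves the price unchanged.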

One of the most famous early papers in this area is “Optimal Auction Design” by Myerson, where he discusses auction mechanisms that maximize the profit for the seller. After reading some papers in this area, I thought I should return and read the original paper by Myerson, and it was indeed a good idea, since the paper formalizes (or makes very explicit) a lot of central concepts in this area. I wanted to blog about the two main tools in mechanism design discussed in the paper: the revelation principle and the revenue equivalence theorem.

Revelation Principle

We could imagine thousands of possible ways of providing economic incentives. It seems a difficult task to look at all possible mechanisms and choose the best one. The good thing is that we don’t need to look at all kinds of mechanisms: we can look only at truthful revelation mechanisms. Let’s formalize that: consider $n$ bidders, where bidder $i$ has value $v_i$ for getting what they want (suppose they are single-parameter agents, i.e., they get $v_i$ if they get what they want or $0$ if they don’t). We can represent the final outcome as a vector $x \in [0,1]^n$. Here we also consider randomized mechanisms, where the final outcome can be, for example, to allocate the item to bidder $i$ with probability $x_i$. This vector has some restrictions imposed by the structure of the problem, e.g., if there is only one item, then we must have $\sum_i x_i \leq 1$. At the end of the mechanism, each player will pay some amount to the mechanism, say $p_i$. Then at the end, player $i$ has utility

$$u_i = v_i x_i - p_i$$

and the total profit of the auctioneer is $\sum_i p_i$.

Let’s describe a general mechanism: in this mechanism each player $i$ has a set of strategies, say $\Theta_i$. Each player chooses one strategy $\theta_i \in \Theta_i$, and the mechanism chooses one allocation and one payment function based on the strategies of the players: $x = x(\theta_1, \ldots, \theta_n)$ and $p = p(\theta_1, \ldots, \theta_n)$. Notice that this includes even complicated multi-round strategies: in this case, the strategy space $\Theta_i$ would be a very complicated description of what a player would do for each outcome of each round.

Let $V_i$ be the set of possible valuations player $i$ could have. So, given the mechanism, each player would pick a function $\theta_i : V_i \to \Theta_i$, i.e., $\theta_i(v_i)$ is the strategy he would pick if he observed his value was $v_i$. This mechanism has an equilibrium if there is a set of such functions that are in equilibrium, i.e., no one could change his function and be better off. If those exist, we can implement this as a direct revelation mechanism. A direct revelation mechanism is a mechanism where the strategies are simply to reveal a value in $V_i$. So, I just ask each player his valuation.

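Given a complicated mechanism $x(\theta)$, $p(\theta)$ and equilibrium strategies $\theta_i(v_i)$, I can implement this as a direct revelation mechanism just by taking:

$$x'(v) = x(\theta_1(v_1), \ldots, \theta_n(v_n)), \qquad p'(v) = p(\theta_1(v_1), \ldots, \theta_n(v_n))$$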
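In code, this construction is just function composition: the direct mechanism plays each bidder’s equilibrium strategy on his behalf. The sketch below is only an illustration under stated assumptions. The helper names are mine, and the example relies on the standard fact that bidding half your value is an equilibrium of the first-price auction with two bidders whose values are independent and uniform on $[0,1]$.

```python
def make_direct_mechanism(mechanism, strategies):
    """Turn an indirect mechanism into a direct revelation mechanism.

    mechanism:  function mapping a list of actions to (allocation, payments)
    strategies: strategies[i](v_i) is bidder i's equilibrium action at value v_i
    """
    def direct(reported_values):
        # Play each bidder's equilibrium strategy on the value he reports.
        actions = [s(v) for s, v in zip(strategies, reported_values)]
        return mechanism(actions)
    return direct


def first_price(bids):
    """First-price auction: highest bid wins and pays its own bid."""
    n = len(bids)
    winner = max(range(n), key=lambda i: bids[i])
    allocation = [1.0 if i == winner else 0.0 for i in range(n)]
    payments = [bids[i] if i == winner else 0.0 for i in range(n)]
    return allocation, payments


def half_bid(v):
    """Assumed equilibrium bid for two bidders with i.i.d. uniform[0,1] values."""
    return v / 2


direct_first_price = make_direct_mechanism(first_price, [half_bid, half_bid])
```

Calling `direct_first_price([0.8, 0.6])` gives the item to bidder 0 and charges him 0.4, which is exactly what would have happened had both bidders played their equilibrium bids in the original auction.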

It is not hard to see that if the mechanism is the direct mechanism $(x', p')$ obtained this way, the best thing for the players to do is to reveal their true valuation directly, eliminating all the complicated steps in between. One practical example of a direct revelation mechanism is the Vickrey auction. Consider the English auction, which is the famous auction that happens in most movies depicting auctions (or at Sotheby’s, for a clear example): there is one item and people keep raising their bids until everyone else drops out, and the last person still bidding gets the item. The clear strategy in those auctions is to raise your bid as long as the current price is below your valuation $v_i$ and there are still other bidders who haven’t dropped out of the auction. Clearly the person with the highest value $v_i$ will get the item. Let $v_j$ be the second-highest value. It is not hard to see that all but one bidder will drop out of the auction once the price passes $v_j$, so the highest bidder is likely to pay about $v_j$. This is exactly the Vickrey auction, where we just emulate the English auction as a direct revelation mechanism. There are of course other issues. The following quote I got from this paper:

“God help us if we ever take the theater out of the auction business or anything else. It would be an awfully boring world.” (A. Alfred Taubman, Chairman, Sotheby’s Galleries)
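Theater aside, the claim that the English auction ends at roughly the second-highest value is easy to check with a small simulation. This is a rough sketch under my own assumptions: integer values, a fixed price increment, every bidder following the stay-in-while-the-price-is-at-most-my-value strategy, and ties broken by lowest index.

```python
def english_auction(values, increment=1):
    """Ascending-price auction where each bidder stays in while price <= value.

    values: list of integer valuations (one per bidder, at least one).
    Returns (winner_index, final_price).
    """
    if not values:
        raise ValueError("need at least one bidder")
    price = 0
    active = list(range(len(values)))
    while len(active) > 1:
        next_price = price + increment
        staying = [i for i in active if values[i] >= next_price]
        if not staying:
            # Everyone left would drop at once: break the tie by index
            # and sell at the last price they all accepted.
            return active[0], price
        price, active = next_price, staying
    return active[0], price


def second_price(values):
    """Direct-revelation counterpart: highest value wins, pays second highest."""
    winner = max(range(len(values)), key=lambda i: values[i])
    return winner, max(v for i, v in enumerate(values) if i != winner)
```

With values `[30, 70, 50]`, the ascending auction stops at price 51 with bidder 1 as the winner, while the second-price rule gives the same winner at price 50. The two differ only by the bid increment.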

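So, we can restrict our attention to mechanisms of the form $x(v)$ and $p(v)$ that are truthful, i.e., where the players have no incentive not to report their true valuation. We can characterize truthful auctions using the next theorem. Just a bit of notation before: let $v_{-i} = (v_1, \ldots, v_{i-1}, v_{i+1}, \ldots, v_n)$, $V_{-i} = \prod_{j \neq i} V_j$, … and for values $v_i$ drawn independently with density $f_i$, let $f(v)$ be the joint probability distribution and $f_{-i}(v_{-i})$ be the joint probability distribution over $v_{-i}$: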

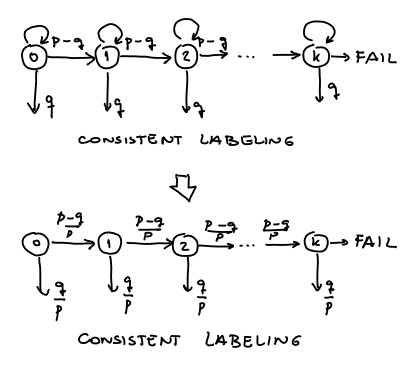

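Theorem 1 An auction is truthful if and only if, for all possible probability distributions over values given by $f_1$, …, $f_n$, we have

- $x_i(v_i)$ is monotone non-decreasing
- $p_i(v_i) = v_i x_i(v_i) - \int_0^{v_i} x_i(z)\,dz$ (normalized so that a bidder with value $0$ pays nothing)

where

$$x_i(v_i) = \int x_i(v_i, v_{-i})\, f_{-i}(v_{-i})\,dv_{-i}, \qquad p_i(v_i) = \int p_i(v_i, v_{-i})\, f_{-i}(v_{-i})\,dv_{-i}$$

are the expected allocation and expected payment of bidder $i$ when he reports $v_i$ and the other values are drawn from $f_{-i}$.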

Revenue Equivalence

The second main tool to reason about mechanisms concerns the revenue of the mechanism: it is Myerson’s Revenue Equivalence Principle, which roughly says that the revenue under a truthful mechanism depends only on the allocation and not on the payment function. This is somewhat expected given the last theorem, since we showed that when a mechanism is truthful, the payments are completely determined by $x$.
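As a sanity check on the payment identity $p_i(v_i) = v_i x_i(v_i) - \int_0^{v_i} x_i(z)\,dz$, here is a small numeric sketch for one specific case that I am assuming purely for illustration: the second-price auction with $n$ bidders whose values are independent and uniform on $[0,1]$. There the expected allocation is $x_i(v) = v^{n-1}$, and the expected payment can also be computed directly as the probability of winning times the expected highest competing value.

```python
def interim_allocation(v, n):
    """Probability that a bidder with value v beats n-1 uniform[0,1] rivals."""
    return v ** (n - 1)


def payment_from_formula(v, n, steps=10_000):
    """p(v) = v * x(v) - integral_0^v x(z) dz, using a midpoint Riemann sum."""
    h = v / steps
    integral = sum(interim_allocation((k + 0.5) * h, n) for k in range(steps)) * h
    return v * interim_allocation(v, n) - integral


def second_price_expected_payment(v, n):
    """Direct computation: P(win) * E[highest rival value | all rivals below v]."""
    return v ** (n - 1) * (n - 1) / n * v
```

Both functions agree up to discretization error, e.g. for `v = 0.8` and `n = 3`, which is what the theorem predicts: once the allocation rule is fixed, the expected payment is pinned down.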

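The profit of the auctioneer is given by $\sum_i \int_{V_i} p_i(v_i) f_i(v_i)\,dv_i$, where we take each $V_i$ to be an interval starting at $0$. We can substitute $p_i$ by the payment formula in the last theorem, $p_i(v_i) = v_i x_i(v_i) - \int_0^{v_i} x_i(z)\,dz$, obtaining:

$$\sum_i \int_{V_i} v_i\, x_i(v_i)\, f_i(v_i)\,dv_i \;-\; \sum_i \int_{V_i} \int_0^{v_i} x_i(z)\,dz\; f_i(v_i)\,dv_i$$

We can invert the order of integration in the second part, getting:

$$\int_{V_i} \int_0^{v_i} x_i(z)\,dz\; f_i(v_i)\,dv_i = \int_{V_i} x_i(z) \left(1 - F_i(z)\right) dz$$

where $F_i$ is the cumulative distribution function of $f_i$. So, we can rewrite profit as:

$$\sum_i \int_{V_i} x_i(v_i) \left[ v_i - \frac{1 - F_i(v_i)}{f_i(v_i)} \right] f_i(v_i)\,dv_i$$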

And that proves the following result:

Theorem 2 The seller’s expected utility from a truthful direct revelation mechanism depends only on the assignment function $x(v)$.
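To see the theorem at work, here is a small numeric sketch, again under assumptions of my own choosing: $n$ bidders with independent uniform $[0,1]$ values, and the allocation rule that gives the item to the highest value. For this distribution the term $v - \frac{1 - F(v)}{f(v)}$ equals $2v - 1$, so the profit depends only on the expected allocation $x_i(v) = v^{n-1}$.

```python
def revenue_from_allocation(n, steps=10_000):
    """Expected profit n * integral_0^1 x(v) * (2v - 1) dv with x(v) = v^(n-1).

    Uses a midpoint Riemann sum; (2v - 1) is v - (1 - F(v)) / f(v)
    for the uniform[0,1] distribution assumed in this sketch.
    """
    h = 1.0 / steps
    total = 0.0
    for k in range(steps):
        v = (k + 0.5) * h
        total += v ** (n - 1) * (2 * v - 1) * h
    return n * total


def closed_form_revenue(n):
    """Known expected revenue of the second-price auction in this setting."""
    return (n - 1) / (n + 1)
```

For two bidders both give $1/3$. The first-price auction has the same allocation rule in equilibrium (the highest value wins), so it raises the same expected revenue even though its payment rule looks completely different.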

Now, to implement a revenue-maximizing mechanism we just need to find the $x$ that optimizes the profit functional above while still meeting the truthfulness constraints in the first theorem. This is discussed by Myerson in his paper. There is just one issue here: our analysis depends on the distributions $f_i$. There are various approaches to that:

- Assume that the values of the bidders are drawn from distributions $f_1, \ldots, f_n$ and given to them. The distributions are public knowledge, but the realization of $v_i$ is known only to bidder $i$.

- Bidders have fixed values $v_i$ (i.e., the $f_i$ are Dirac distributions concentrated on $v_i$), and in this case the revenue-maximizing problem becomes trivial. It is still an interesting problem from the point of view of truthfulness. But in this case, we should assume that $v_i$ is fixed but only player $i$ knows its value.

- The distributions exist but they are unknown to the mechanism designer. In this case, he wants to design a mechanism that provides good profit guarantees against all possible distributions. The profit guarantees need to be established according to some benchmark. This is called prior-free mechanism design.

More references about Mechanism Design can be found in these lectures by Jason Hartline, in the original Myerson paper, or in the Algorithmic Game Theory book.

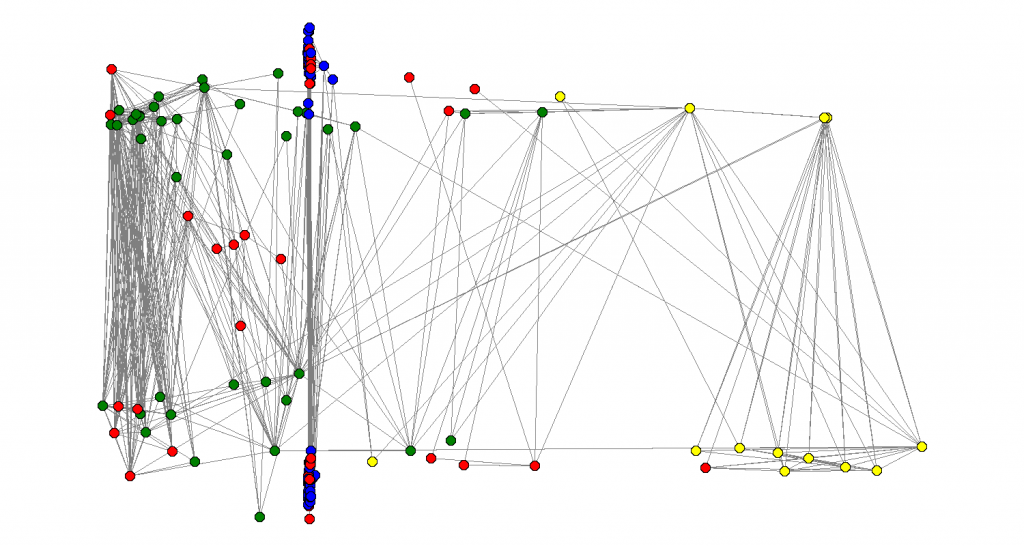

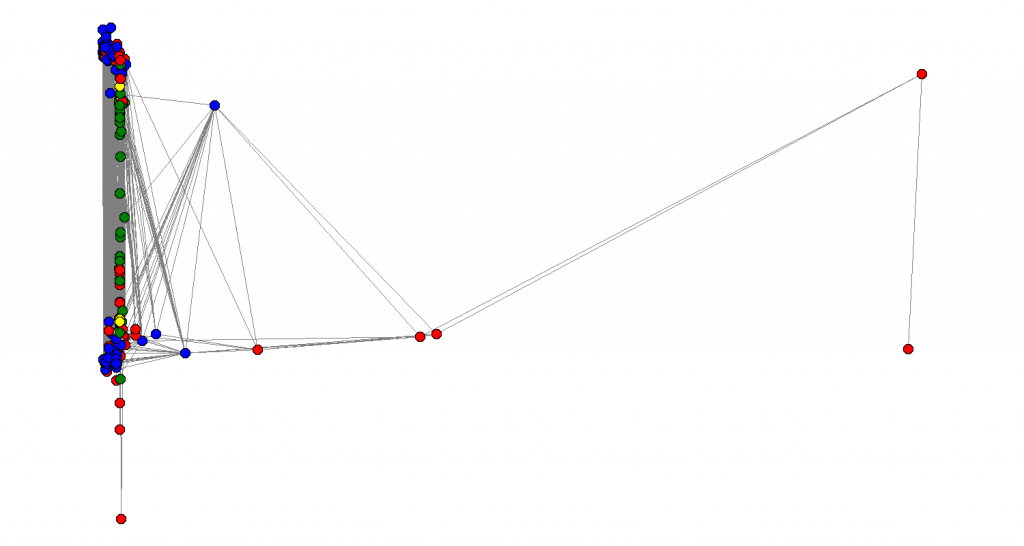

Also, I looked at

Also, I looked at  See how all the nodes that were somehow “important” in the last two graphs all collapse to the same place and now we are trying to sort the other nodes. How far can we go? Is there some clean recursive way of extracting multiple (possible overlapping) communities in those large graphs just looking in the local neighborhood? I haven’t reviewed much of the literature yet.

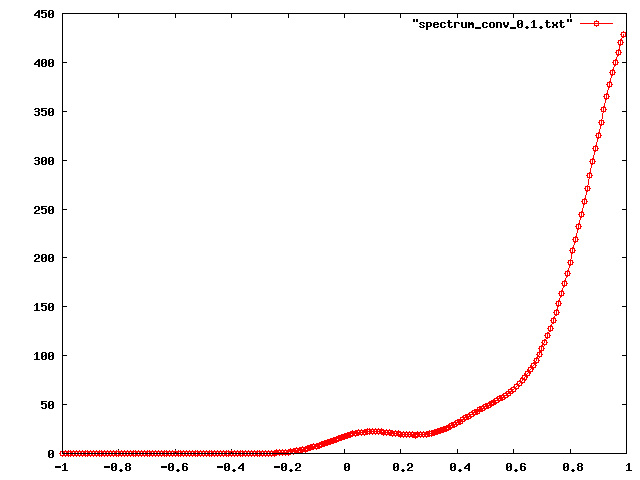

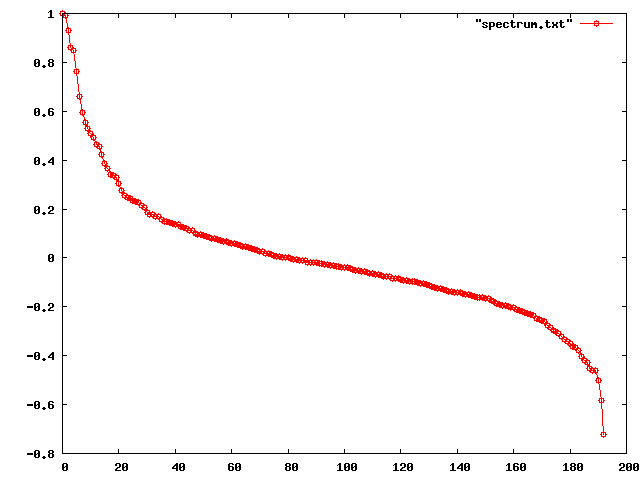

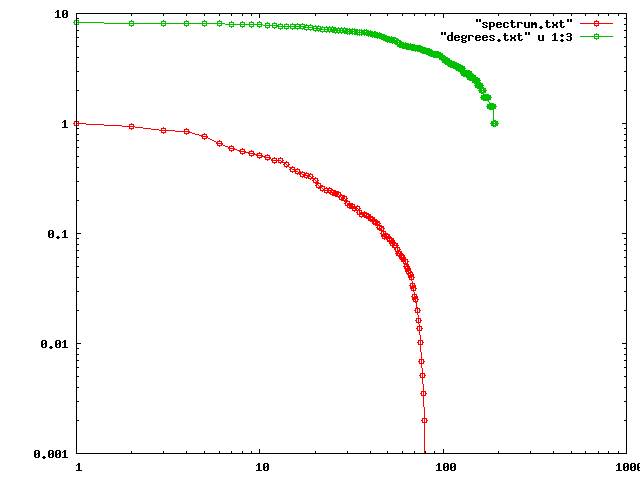

See how all the nodes that were somehow “important” in the last two graphs all collapse to the same place and now we are trying to sort the other nodes. How far can we go? Is there some clean recursive way of extracting multiple (possible overlapping) communities in those large graphs just looking in the local neighborhood? I haven’t reviewed much of the literature yet. Most of the eigenvalues are accumulated on the positive side (this graph really seems far from being bipartite). Another interesting question if: how can we interpret the shape of this curve. Which characteristics from the graph can we get? I still don’t know how to answer this questions, but I think

Most of the eigenvalues are accumulated on the positive side (this graph really seems far from being bipartite). Another interesting question if: how can we interpret the shape of this curve. Which characteristics from the graph can we get? I still don’t know how to answer this questions, but I think  This seems like a nice symmetric pattern and there should be a reason for that. I don’t know in what extent this is a property of real world graphs or from graphs in general. There is

This seems like a nice symmetric pattern and there should be a reason for that. I don’t know in what extent this is a property of real world graphs or from graphs in general. There is  Even though they are not a power-law, they still seem to have a similar shape, which I can’t exacly explain right now. Papadimitrou suggests that the fact that

Even though they are not a power-law, they still seem to have a similar shape, which I can’t exacly explain right now. Papadimitrou suggests that the fact that